OpenVINO

Deploy High-Performance Deep Learning Inference

Your AI and Computer Vision Apps...Now Faster

Develop applications and solutions that emulate human vision with the Intel® Distribution of OpenVINO™ toolkit. Based on convolutional neural networks (CNNs), the toolkit extends workloads across Intel® hardware, including accelerators, and maximizes performance.

- Enables deep learning inference from edge to cloud

- Accelerates AI workloads, including computer vision, audio, speech, language, and recommendation systems

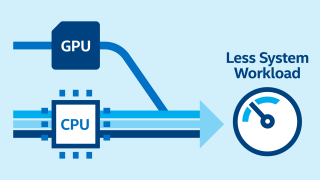

- Supports heterogeneous execution across Intel® architecture and AI accelerators—CPU, iGPU, Intel® Movidius™ Vision Processing Unit (VPU), FPGA, and Intel® Gaussian & Neural Accelerator (Intel® GNA)—using a common API

- Speeds up time to market via a library of functions and preoptimized kernels

- Includes optimized calls for OpenCV, OpenCL™ kernels, and other industry tools and libraries

| Deep Learning Inference | Traditional Computer Vision | Hardware Acceleration |
| --- | --- | --- |
| Accelerate and deploy neural network models across Intel® platforms with a built-in model optimizer for pretrained models and an inference engine runtime for hardware-specific acceleration. | Develop and optimize classic computer vision applications built with the OpenCV library and other industry tools. | Harness the performance of Intel®-based accelerators: CPUs, iGPUs, FPGAs, VPUs, Intel® Gaussian & Neural Accelerators, and IPUs. |